Public cloud spending is heading toward a massive US $723 billion, but are teams really in control of their costs?

This growth is mostly driven by enterprises moving legacy workloads into AWS, building cloud-native applications, and experimenting with emerging technologies like generative AI. Yet as cloud adoption accelerates, so does the pressure on engineering and FinOps teams to understand whether their AWS environments are truly cost-efficient.

Many organizations measure overall cloud spend, but few track the AWS Savings Plan KPIs that reveal efficiency, commitment health, and utilization quality.

In environments where usage patterns shift weekly and architecture changes are frequent, teams need actionable AWS cost-savings metrics to know whether they are capturing the expected discounts or leaving money on the table.

This article outlines the seven most important AWS Savings Plan KPIs every FinOps and DevOps team should track to build predictable, measurable cloud efficiency.

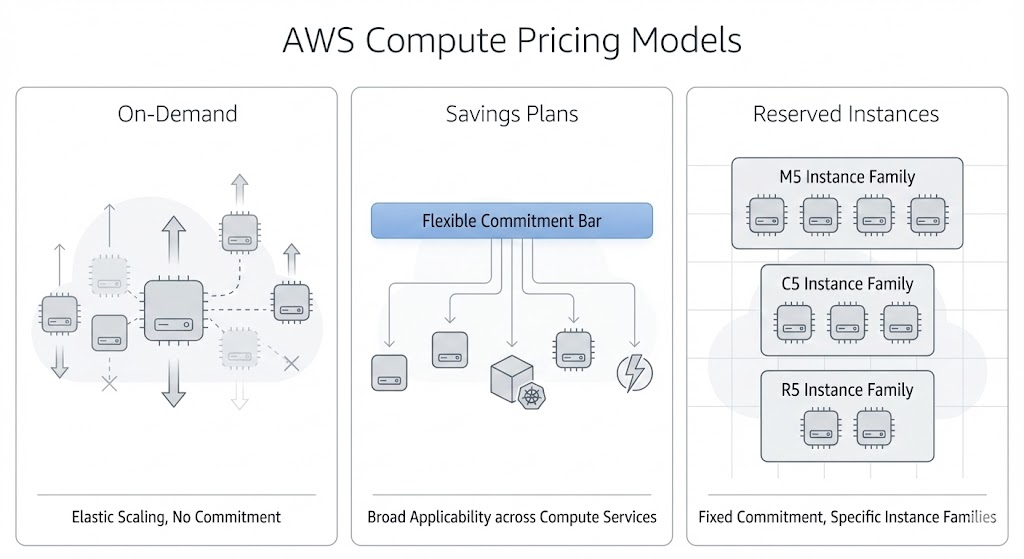

Before measuring AWS savings plan performance, it’s important to understand what you’re actually measuring. AWS offers multiple ways to reduce compute costs, but each mechanism introduces different levels of flexibility, commitment, and financial risk.

On-Demand pricing is the starting point for almost every workload. It offers complete flexibility where you can spin resources up, shut them down, and only pay for what you use. It’s ideal for experimentation and unpredictable patterns, but it’s also the most expensive way to operate long-running workloads. Most savings KPIs compare actual spend to what you would have paid using On-Demand rates.

AWS Savings Plans reduce cost in exchange for a commitment to a consistent amount of compute usage, measured in dollars per hour, for a one- or three-year term. They’re popular because they apply automatically across a wide range of compute services and instance families (Compute Savings Plans, for example, cover EC2, Fargate, and Lambda usage), which makes them easier to fit to evolving architectures.

For KPI tracking, AWS Savings Plans introduce two central questions:

Reserved Instances offer similar discounts but attach those discounts to specific instance families and configurations. They work well for stable, predictable workloads where teams know exactly what they will be running for the long term.

With RIs, it becomes especially important to monitor:

Commitments don’t guarantee savings by themselves. They create the opportunity for savings, and teams capture those savings only when their commitments match real-world usage. Architectural shifts, seasonal patterns, autoscaling behavior, and right-sizing efforts can all change the shape of your environment month to month.

Without a KPI framework, it’s easy to end up with unused commitments, under-coverage, or inconsistent savings performance. The right measures help you understand efficiency, identify leakage, and confirm that your commitments are working as expected. The seven KPIs in the next section provide a practical, data-driven way to track all of that.

Coverage is the foundational KPI for any AWS savings plan strategy. It measures how much of your compute usage is protected by discounted commitments (i.e., Savings Plans or Reserved Instances) versus how much is still billed at higher On-Demand rates. Even small pockets of uncovered usage can add up quickly, especially in environments that scale dynamically.

Coverage tells you whether you're fully leveraging commitment-based discounts. Low coverage typically means:

Extremely high coverage, on the other hand, can introduce risk if usage suddenly drops.

Most teams calculate coverage as the percentage of total compute usage that falls under Savings Plans or RIs during a specific period.

Coverage % = (Committed Usage ÷ Total Compute Usage) × 100

You can express this in terms of spend, hours, or normalized units depending on your internal reporting standards.

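Let’s assume that in a given month your total compute usage is 10,000 units (spend, hours, or normalized units) and 7,200 of those units are covered by Savings Plans or RIs: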

Coverage % = (7,200 ÷ 10,000) × 100 = 72%

This means 72% of your usage benefited from commitment discounts, and the remaining 28% ran at On-Demand rates.
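The coverage formula translates directly into a small helper. The sketch below is a hypothetical illustration (the name `coverage_pct` is not part of any AWS SDK) and assumes committed and total usage are already expressed in the same unit, whether spend, hours, or normalized units.

```python
def coverage_pct(committed_usage: float, total_compute_usage: float) -> float:
    """Share of compute usage covered by Savings Plans or RIs, as a percentage.

    Assumes both inputs use the same unit (spend, hours, or normalized units).
    """
    if total_compute_usage <= 0:
        raise ValueError("total_compute_usage must be positive")
    if not 0 <= committed_usage <= total_compute_usage:
        raise ValueError("committed_usage must be between 0 and total_compute_usage")
    return 100 * committed_usage / total_compute_usage


# Worked example from above: 7,200 of 10,000 units covered by commitments.
covered = coverage_pct(7_200, 10_000)
print(f"Covered: {covered:.0f}%  On-Demand: {100 - covered:.0f}%")
```

Whichever unit you choose, keep it the same from month to month so that the trend stays comparable.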

Healthy coverage varies by environment:

The goal should be a consistent match between your commitments and your real usage over time.

Coverage is the first and clearest indicator of how well your commitment strategy aligns with real-world behavior. Every other KPI builds on this one.

While coverage tells you how much of your environment is protected by commitments, utilization tells you how effectively you’re using what you’ve already purchased. Commitment Utilization Rate measures the percentage of your Savings Plans or Reserved Instances that are actually being consumed by real workloads.

Commitments create the opportunity for lower costs, but only when workloads consume the capacity you agreed to. Low utilization means you’re paying for committed capacity that goes unused, while high utilization means your commitments are well aligned with real activity.

This KPI helps teams understand:

Utilization % = (Used Commitment ÷ Purchased Commitment) × 100

This can be measured in:

Let’s assume you purchased a Savings Plan with a commitment of $50/hour of compute usage, and during this month the compute usage billed under the plan averages $42/hour:

Utilization % = ($42 ÷ $50) × 100 = 84%

This means you are using only 84% of your commitment, and the remaining 16% ($8/hour) is wasted spend for that period, because the unused portion of a commitment is still billed.
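A minimal sketch of the utilization calculation, assuming used and purchased commitment are expressed in the same unit (here, dollars per hour). The function names are hypothetical helpers for illustration, not an AWS API; `unused_commitment` simply makes the wasted portion explicit.

```python
def utilization_pct(used_commitment: float, purchased_commitment: float) -> float:
    """Share of a purchased commitment consumed by real workloads, as a percentage."""
    if purchased_commitment <= 0:
        raise ValueError("purchased_commitment must be positive")
    return 100 * used_commitment / purchased_commitment


def unused_commitment(used_commitment: float, purchased_commitment: float) -> float:
    """Committed amount paid for but not consumed (floored at zero)."""
    return max(purchased_commitment - used_commitment, 0.0)


# Worked example from above: $50/hour committed, $42/hour consumed.
print(f"Utilization: {utilization_pct(42, 50):.0f}%")
print(f"Unused: ${unused_commitment(42, 50):.2f}/hour")
```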

Utilization targets depend on environment type:

High utilization doesn’t always mean “buy more.” It just signals that your commitments are well matched to current patterns.

Low utilization is almost always a sign that commitments should be adjusted or that commitments were purchased based on outdated assumptions.

Understanding the difference between expected savings and realized savings is essential for evaluating whether your AWS cost-optimization strategy is actually delivering the results you anticipated. Many teams assume that buying Savings Plans or Reserved Instances guarantees savings, but the financial outcome depends entirely on how consistently those commitments align with your actual usage.

Expected savings represent the theoretical discount AWS commitments can deliver under perfect usage conditions. Realized savings reflect what you actually saved after real-world fluctuations, like autoscaling, migrations, right-sizing, service changes, and usage dips.

This KPI helps teams answer questions like:

Realized Savings % = (On-Demand Cost Equivalent – Actual Cost) ÷ On-Demand Cost Equivalent × 100

Where:

Let’s assume that for this month your On-Demand cost equivalent is $120,000 and your actual cost (after Savings Plans and RIs) is $84,000.

Realized Savings % = ($120,000 – $84,000) ÷ $120,000 × 100 = 30%

This means you achieved 30% real savings after commitments were applied. If your procurement model projected 40% savings, there is a 10-percentage-point gap between expectation and reality.
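The realized-savings formula and the expectation gap can be sketched as two small functions. This is an illustrative sketch only: the names are hypothetical, and the 40% projection is the example figure from the text, not a benchmark.

```python
def realized_savings_pct(on_demand_equivalent: float, actual_cost: float) -> float:
    """Savings achieved after commitments, relative to the On-Demand cost equivalent."""
    if on_demand_equivalent <= 0:
        raise ValueError("on_demand_equivalent must be positive")
    return 100 * (on_demand_equivalent - actual_cost) / on_demand_equivalent


def savings_gap_points(expected_pct: float, realized_pct: float) -> float:
    """Percentage-point shortfall of realized savings versus the projection."""
    return expected_pct - realized_pct


# Worked example from above: $120,000 On-Demand equivalent, $84,000 actual cost,
# compared against a 40% projected savings rate.
realized = realized_savings_pct(120_000, 84_000)
gap = savings_gap_points(40, realized)
print(f"Realized: {realized:.0f}%  Gap: {gap:.0f} points")
```

Reporting the gap in percentage points rather than as a relative percentage keeps it directly comparable with the projected and realized rates.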

There’s no universal benchmark, but the following patterns are worth considering:

This KPI provides the clearest window into the financial performance of your savings strategy. The next KPI builds on this by measuring how much of the maximum possible discount you’re actually capturing.

Savings Efficiency measures how effectively your organization captures the maximum possible savings available from commitment-based discounts. Even if coverage and utilization appear healthy, it’s still possible to leave meaningful savings on the table.

This KPI gives teams a clearer view of how much discount value they’re truly capturing compared to what they could have captured with optimally sized commitments.

Savings Efficiency cuts through the noise of total spend and provides a clean indicator of discount performance. It helps teams understand:

Savings Efficiency % = Realized Savings ÷ Maximum Potential Savings × 100

Where:

Let’s assume that your maximum potential savings for this month is $80,000 and the realized savings is $56,000.

Savings Efficiency % = $56,000 ÷ $80,000 × 100 = 70%

This means your organization captured 70% of the total possible discount value, leaving 30% ($24,000) of the available savings opportunity uncaptured.
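A minimal sketch of Savings Efficiency, assuming you already have an estimate of the maximum potential savings (how that figure is modeled depends on your tooling and is outside this sketch). The function name is hypothetical.

```python
def savings_efficiency_pct(realized_savings: float, max_potential_savings: float) -> float:
    """Share of the maximum achievable discount value actually captured."""
    if max_potential_savings <= 0:
        raise ValueError("max_potential_savings must be positive")
    return 100 * realized_savings / max_potential_savings


# Worked example from above: $56,000 realized out of $80,000 possible.
realized_savings = 56_000
max_potential = 80_000
efficiency = savings_efficiency_pct(realized_savings, max_potential)
uncaptured = max_potential - realized_savings
print(f"Efficiency: {efficiency:.0f}%  Uncaptured: ${uncaptured:,}")
```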

There’s no universal benchmark, but the following patterns are worth considering:

While 100% efficiency is theoretically ideal, the goal should be to minimize inefficiency, not to eliminate it entirely.

Savings Efficiency helps teams determine whether their current commitment strategy is optimized or whether structural adjustments are needed before the next renewal or purchase cycle.

Burndown Forecast Accuracy measures how closely your predicted resource usage aligns with actual consumption over time. Since Savings Plans and Reserved Instances are commitments made months or years in advance, forecasting errors can directly impact savings outcomes.

When forecasts are too optimistic, you risk overcommitting; when they’re too conservative, you miss out on deeper discounts. This KPI helps teams evaluate how reliably their forecasting models support long-term commitment decisions.

EC2 fleets, container workloads, and service adoption patterns rarely stay static. Architecture changes, right-sizing efforts, seasonal patterns, and even organizational restructuring can all affect how much compute your environment consumes.

Accurate forecasting allows teams to:

There are several ways to measure forecast accuracy, but a simple and effective method is based on percentage error, which measures how close actual usage came to your predicted value.

Forecast Accuracy % = (1 – |Actual Usage – Forecasted Usage| ÷ Forecasted Usage) × 100

Let’s assume your forecast predicted 50,000 instance-hours for the month, and your actual usage came in at 46,000 instance-hours.

Forecast Accuracy % = (1 – |46,000 – 50,000| ÷ 50,000) × 100 = 92%

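This means your forecast was 92% accurate, which is strong. Because actual usage came in below the forecast, commitments sized to it would have been slightly overcommitted. Forecasts with accuracy above 85–90% generally support healthy commitment decisions.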
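The accuracy formula can be sketched as follows. `forecast_bias_pct` is an addition that goes beyond the article’s formula: the signed error shows the direction of the miss, which matters because over-forecasting leads to overcommitment and under-forecasting to missed discounts. Both function names are hypothetical.

```python
def forecast_accuracy_pct(actual_usage: float, forecasted_usage: float) -> float:
    """Accuracy based on absolute percentage error against the forecast.

    Note: a miss larger than the forecast itself yields a negative value.
    """
    if forecasted_usage <= 0:
        raise ValueError("forecasted_usage must be positive")
    return 100 * (1 - abs(actual_usage - forecasted_usage) / forecasted_usage)


def forecast_bias_pct(actual_usage: float, forecasted_usage: float) -> float:
    """Signed error: negative means usage came in below forecast (overcommit risk)."""
    if forecasted_usage <= 0:
        raise ValueError("forecasted_usage must be positive")
    return 100 * (actual_usage - forecasted_usage) / forecasted_usage


# Worked example from above: 50,000 instance-hours forecast, 46,000 actual.
print(f"Accuracy: {forecast_accuracy_pct(46_000, 50_000):.0f}%")
print(f"Bias: {forecast_bias_pct(46_000, 50_000):+.0f}%")
```

Computing both values every month gives you the accuracy trend and shows whether misses lean consistently in one direction.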

Forecast accuracy varies widely depending on workload type:

What matters is not a single accuracy score, but the trend. Improving accuracy over time signals maturity in both engineering and FinOps processes.

A reliable forecast is the backbone of a long-term AWS savings strategy. The next KPI focuses on workload-level cost performance, another critical dimension of savings measurement.

While most AWS savings plan KPIs focus on commitments and compute efficiency, Cost per Functional Unit zooms in on the cost of delivering value to the business. This KPI measures how much it costs to serve a single unit of work, such as a request, job, user session, pipeline run, or processed message, regardless of the underlying architecture.

This shifts the conversation from infrastructure costs to workload performance, making it easier for engineering and product teams to understand whether efficiency is improving or declining over time.

It is possible for overall cloud spend to go down while workload efficiency actually gets worse, or vice versa. This KPI controls for noise by tying cost directly to output.

Teams use it to answer questions like:

The formula varies by workload, but the structure remains the same:

Cost per Functional Unit = Total Cost of Workload ÷ Total Units of Output

Where “units of output” may be:

The KPI adapts to any workload where output can be measured.

Let’s assume that a service processes 200 million API requests per month and its total monthly cost (compute + storage + data transfer) is $40,000.

Cost per Request = $40,000 ÷ 200,000,000 = $0.0002 per request

If last month the service cost $0.00025 per request, efficiency has improved by 20%. If the value had risen instead, something regressed, and performance tuning, scaling rules, or infrastructure configuration may need review.
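A minimal sketch of cost per functional unit and its month-over-month change, using the request example above. The function names are hypothetical; the sketch assumes the workload’s total cost (compute, storage, and data transfer) has already been allocated to it.

```python
def cost_per_unit(total_cost: float, units_of_output: float) -> float:
    """Total workload cost divided by units of output (requests, jobs, sessions, ...)."""
    if units_of_output <= 0:
        raise ValueError("units_of_output must be positive")
    return total_cost / units_of_output


def pct_change(previous: float, current: float) -> float:
    """Relative change from previous to current, as a percentage."""
    if previous == 0:
        raise ValueError("previous must be non-zero")
    return 100 * (current - previous) / previous


# Worked example from above: $40,000 for 200 million requests,
# compared with $0.00025 per request last month.
this_month = cost_per_unit(40_000, 200_000_000)
change = pct_change(0.00025, this_month)
print(f"${this_month:.4f} per request ({change:+.0f}% vs last month)")
```

A negative change means each unit of output became cheaper to deliver, independent of whether total spend rose or fell.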

“Good” is highly workload-specific, but the trends matter more than the raw numbers. Efficient workloads usually show:

Cost per functional unit becomes extremely powerful when trended over time because it highlights both operational improvements and architectural inefficiencies.

This KPI helps engineering and FinOps teams speak the same language: the cost of delivering value. The final KPI adds an often overlooked dimension, data transfer and retrieval.

While most AWS savings plan discussions focus on compute, data transfer and retrieval costs are often the silent contributors to rising cloud bills. In many organizations, these costs grow faster than compute itself, especially when architectures become more distributed, services communicate more frequently, or data pipelines expand.

This KPI helps teams measure how efficiently data moves across services, regions, and storage layers, and how these patterns impact their overall savings strategy.

Even if your compute commitments are perfectly optimized, unexpected data movement can erode the savings you expected to capture. The following are some common patterns to look for:

These costs don’t show up in commitment KPIs, but they directly influence the true efficiency of your workloads.

There are several ways to track this, but a simple, workload-oriented KPI is:

Data Transfer Cost per Unit = Total Data Transfer Cost ÷ Total Volume of Data Moved

You can apply this formula per workload, per environment, or per service.

Let’s assume a data processing pipeline transfers 150 TB per month, and the associated transfer and retrieval charges total $7,500.

Cost per TB = $7,500 ÷ 150 = $50 per TB

If the cost per TB then jumps from $50 to $70 next month (a 40% increase), the rise may come from routing inefficiencies, unnecessary cross-AZ movement, aggressive replication settings, or new retrieval patterns.
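A minimal sketch of the per-TB calculation and the month-over-month comparison described above. The function name is hypothetical, and the $70/TB figure is the example jump from the text rather than real billing data.

```python
def transfer_cost_per_tb(transfer_and_retrieval_cost: float, tb_moved: float) -> float:
    """Data transfer plus retrieval charges per terabyte moved."""
    if tb_moved <= 0:
        raise ValueError("tb_moved must be positive")
    return transfer_and_retrieval_cost / tb_moved


# Worked example from above: $7,500 of charges for 150 TB moved.
baseline = transfer_cost_per_tb(7_500, 150)
next_month = 70.0  # the $70/TB jump described in the text
increase = 100 * (next_month - baseline) / baseline
print(f"Baseline: ${baseline:.0f}/TB  Next month: ${next_month:.0f}/TB (+{increase:.0f}%)")
```

A single per-TB figure shows that the rate moved. Breaking the numerator down by charge type (for example cross-AZ, internet egress, and retrieval) is what shows why it moved.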

Because data patterns vary widely, there isn’t a single benchmark. However, efficient environments typically show:

The goal shouldn’t just be to reduce data transfer, but also to understand when and why it happens, and how it impacts overall savings performance.

Data transfer and retrieval costs often represent the missing piece in savings analysis. Compute commitments may be optimized, but shifting data patterns can quietly chip away at realized savings and distort workload efficiency metrics.

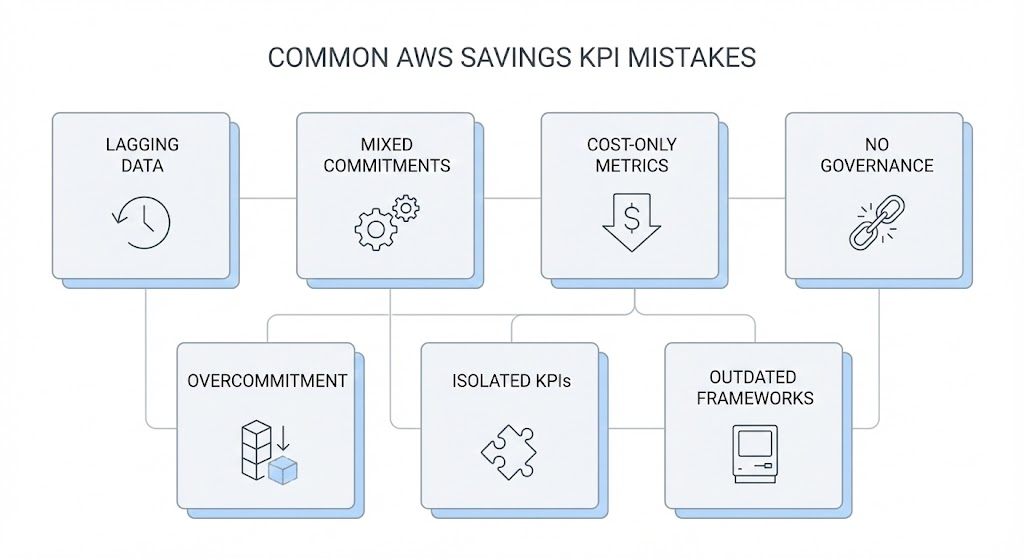

Below are some of the most common pitfalls FinOps and engineering teams encounter when tracking AWS Savings Plan KPIs, and why these mistakes can quietly undermine otherwise solid optimization efforts.

Many teams base KPIs on delayed billing data, which means commitment decisions are tied to outdated usage patterns. In fast-changing environments, this leads to misaligned commitments, late detection of utilization drops, and savings projections that don’t match reality.

Savings Plans and Reserved Instances behave differently, yet some organizations group them together as a single metric. This masks important differences in flexibility, risk, and applicability, making coverage and utilization KPIs less accurate and harder to act on.

A workload costing less this month doesn’t automatically mean it became more efficient. Without metrics like cost per request or cost per job, organizations miss operational regressions and misinterpret savings that are really the result of traffic changes and not optimization.

KPIs fail when engineering, finance, and platform teams interpret them differently or make decisions in isolation. Without shared assumptions and coordinated ownership, commitment strategies drift, forecasts diverge, and savings outcomes become unpredictable.

Chasing high coverage or high utilization can push teams into overcommitment, especially in variable or evolving environments. Without a buffer for seasonal or architectural changes, organizations face underutilized commitments and financial exposure when usage dips.

Coverage, utilization, efficiency, and forecasting aren’t separate stories. Evaluating each KPI on its own hides the relationships between them, making it harder to see issues like misaligned commitments or architectural inefficiencies.
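To illustrate reading KPIs together, here is a hypothetical sketch that interprets coverage and utilization as a pair. The thresholds are assumptions, not AWS guidance: the 70% coverage default echoes the lower end of the range mentioned in the FAQ below, and the 90% utilization floor is purely illustrative. Tune both per environment.

```python
def read_coverage_and_utilization(
    coverage_pct: float,
    utilization_pct: float,
    coverage_target: float = 70.0,    # hypothetical target; tune per environment
    utilization_floor: float = 90.0,  # hypothetical floor; tune per environment
) -> str:
    """Interpret coverage and utilization together rather than in isolation."""
    low_coverage = coverage_pct < coverage_target
    low_utilization = utilization_pct < utilization_floor
    if low_utilization and not low_coverage:
        return "possible overcommitment: commitments exceed what workloads consume"
    if low_utilization and low_coverage:
        # Unused commitment alongside On-Demand usage usually means the
        # commitments don't match the shape of usage (family, region, or hour).
        return "misaligned commitments: unused commitment alongside uncovered usage"
    if low_coverage:
        return "under-coverage: commitments are consumed, On-Demand usage remains"
    return "aligned: coverage and utilization both meet targets"


print(read_coverage_and_utilization(72, 84))
```

Viewed alone, neither number distinguishes overcommitment from misalignment. The pair does.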

Cloud environments change constantly, but KPI frameworks often remain static. When teams don’t revisit what they measure or how they measure it, they end up tracking outdated metrics that no longer reflect how workloads behave or where costs originate.

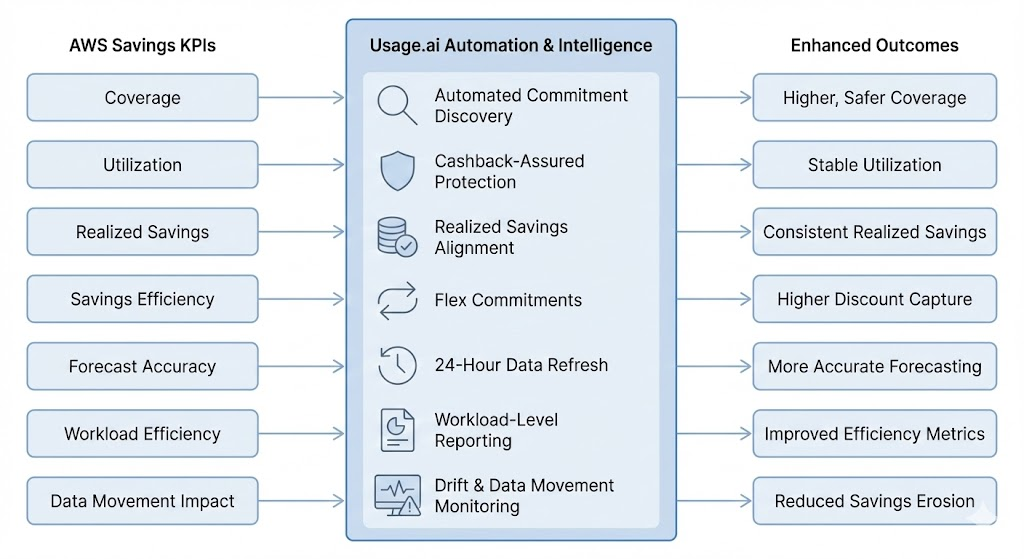

After establishing a strong KPI framework, the next step is ensuring those KPIs translate into consistent, predictable savings. This is where Usage.ai provides a measurable advantage.

By automating commitment decisions, refreshing recommendations daily, and offering cashback-assured coverage, Usage.ai strengthens the exact KPIs FinOps teams rely on to assess the health of their savings strategy.

Usage.ai continuously scans billing and usage to identify where Savings Plans or RIs can safely increase coverage. Instead of relying on lagging or static recommendations, Usage.ai updates insights every 24 hours, helping teams maintain coverage levels that match actual usage patterns.

One of Usage.ai’s most distinctive strengths is its cashback-assured commitment model. If commitments become underutilized due to shifting workloads, Usage.ai pays customers real cash back, effectively reducing the financial risk of utilization drops. This allows teams to achieve higher utilization safely, without fear of overcommitting.

Because Usage.ai charges only a percentage of realized savings, its incentives are aligned with actual KPI performance. Teams gain clarity into the true financial impact of their commitments, and Usage.ai helps ensure that realized savings remain close to expected savings over time.

Flex Commitments provide Savings Plan–like discounts without long-term lock-in. This gives teams discount coverage even as architectures shift, instance families change, or workloads scale unpredictably.

AWS-native recommendations can lag several days. Usage.ai’s 24-hour refresh cycle helps teams base forecasts and commitment decisions on the most current usage patterns available. This reduces the risk of overcommitting during usage peaks or undercommitting during dips.

With detailed reporting and transparency features, Usage.ai helps organizations break down savings and commitments by service, team, or workload. This enables more accurate cost-per-unit calculations and highlights where efficiency is improving or regressing.

By monitoring commitment coverage and usage alignment daily, Usage.ai helps reduce leakage that often emerges from architectural drift, such as shifts to new instance families, right-sizing, or increasing data transfer patterns that distort the savings picture.

What separates mature cloud cost practices from reactive ones is consistency. When these KPIs are reviewed regularly and trended over time, they provide a reliable foundation for smarter commitments.

As cloud environments continue to evolve, the organizations that treat savings as a measurable, data-driven discipline will be the ones that maintain both financial efficiency and architectural agility.

1. What are the most important AWS Savings Plan KPIs to track?

The essential AWS Savings Plan KPIs include: coverage percentage, commitment utilization rate, realized vs expected savings, savings efficiency, burndown forecast accuracy, cost per functional unit, and data transfer/retrieval cost KPIs.

2. How often should FinOps teams review AWS Savings Plan KPIs?

Most teams review Savings Plan KPIs monthly, with weekly or daily checks in fast-changing environments. More dynamic workloads benefit from higher-frequency reviews to detect utilization drops or cost anomalies early.

3. What causes low utilization of Savings Plans or Reserved Instances?

Low utilization typically comes from architecture changes, workload right-sizing, usage drops, shifting to new instance families, or services migrating across regions or platforms. Any change that reduces the footprint of the resources your commitments were sized for can cause underutilization.

4. What is a good coverage percentage for AWS Savings Plans?

There’s no universal target, but many stable workloads maintain 70–90% coverage successfully. Highly variable or seasonal environments often choose more moderate coverage to avoid risk.

5. How do I know if I’m overcommitted on AWS?

You may be overcommitted if utilization consistently stays below expectations, realized savings trend downward, or workloads shrink without corresponding adjustment of commitments. Forecasting errors and unexpected architectural changes are common triggers.

6. Why do AWS forecasting errors impact savings?

Forecasting errors affect commitment sizing. If forecasts overestimate usage, you risk underutilized commitments. If they underestimate, you miss available discounts. High forecast accuracy leads to more predictable savings and fewer surprises in coverage or utilization.

7. Why do data transfer and retrieval costs matter for AWS savings?

Data transfer and S3 retrieval fees can consume a significant portion of cloud spend and often grow faster than compute usage. If these costs rise unexpectedly, they can reduce overall workload efficiency and distort savings metrics.

8. How does cost per functional unit improve AWS savings visibility?

Cost per functional unit ties cloud spend to output (e.g., cost per request or cost per job), making it easier to see whether efficiency is improving. It exposes patterns that raw spend cannot, like regressions after deployments or architecture decisions that increase cost per unit.
