
If your company spends $5 million a year on cloud infrastructure, there’s a strong chance $1.25–$1.75 million of it is wasted. Cloud waste is structural, and it compounds faster than most teams can manually correct it.

Cloud spending continues to grow more than 20% year over year, yet 67% of companies still struggle to accurately forecast their cloud costs. Meanwhile, traditional optimization efforts take six to nine months to fully implement, by which time hundreds of thousands of dollars in preventable overpayment have already accumulated.

That’s why cloud cost optimization best practices in 2026 look very different from what they did just a few years ago.

Today, reducing cloud costs isn’t just about deleting idle instances or setting budgets. It is also about systematically increasing commitment coverage, eliminating structural overpayment on on-demand usage, and refreshing decisions fast enough to keep pace with changing workloads across AWS, Azure, and GCP.

The most effective teams today follow a layered approach. They improve visibility, eliminate waste, and optimize commitment strategy. Only then do 30–50% savings become possible.

In this guide, we’ll break down 18 cloud cost optimization best practices that move beyond dashboards and into measurable, sustained savings.

Cloud cost optimization is the practice of applying structured best practices to reduce on-demand cloud spend, increase discounted commitment coverage, and eliminate structural waste across AWS, Azure, and GCP without degrading performance or slowing engineering teams.

At its core, cloud cost optimization is about aligning cloud usage with financial efficiency. That means:

Most cloud bills are split into two categories:

Organizations that focus only on deleting idle resources typically reduce costs by 10–20%. Teams that also optimize commitment coverage while managing risk can often achieve 30–50% reductions without changing application code.

Also read: How Cloud Cost Optimization Actually Works

In practice, cloud cost optimization falls into three distinct categories. Each category builds on the previous one and each unlocks a different level of savings.

The first layer of cloud cost optimization best practices focuses on visibility and accountability.

You cannot optimize what you cannot see. So, this category includes:

Visibility best practices expose inefficiencies, but they do not eliminate them on their own.

The second layer focuses on actively eliminating waste. These cloud cost optimization best practices target:

This is where most engineering-driven optimization happens. Waste reduction produces meaningful savings (often 10–20%), but it has a natural ceiling. Once obvious inefficiencies are cleaned up, incremental gains become smaller and harder to capture.

The third category is where true leverage lives. Instead of focusing on what to delete, this category focuses on how you purchase.

Every cloud bill has a baseline of predictable usage that includes steady-state compute, databases, and core workloads that run continuously. When that baseline runs on on-demand pricing, organizations overpay by design.

Commitment-focused cloud cost optimization best practices aim to:

This is where AWS Savings Plans, Reserved Instances, Azure Reservations, and GCP Committed Use Discounts come into play.

And it’s also where many teams hesitate because commitments introduce perceived lock-in risk. But when managed properly, commitment and coverage optimization can unlock 30–50% savings without requiring any code changes.

If you can’t explain your cloud bill in five minutes, you’re not optimizing. You’re guessing.

Remember that visibility best practices don’t reduce costs directly. They expose where structural overpayment lives. In most mid-market cloud environments ($1M–$10M annual spend), this layer unlocks 5–10% savings and prevents larger mistakes later.

Stop optimizing line items and focus on optimizing concentration.

In nearly every multi-cloud environment:

If you cannot answer:

You’re not ready for advanced optimization.

Field rule: If a service represents >20% of total spend, it deserves dedicated optimization modeling.

Most teams waste time trimming small services while ignoring structural compute exposure. Fix that first.
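The 20% field rule is easy to check mechanically. Here is a minimal sketch, assuming you already have spend totals per service in a plain dictionary. The function name, service names, and dollar figures are made up for illustration.

```python
def concentrated_services(spend_by_service, threshold=0.20):
    """Return {service: share_of_total} for services above the threshold share."""
    total = sum(spend_by_service.values())
    if total <= 0:
        return {}
    shares = {name: cost / total for name, cost in spend_by_service.items()}
    return {name: share for name, share in shares.items() if share > threshold}


# Hypothetical monthly spend by service (illustrative numbers only).
monthly_spend = {
    "compute": 600_000,
    "database": 250_000,
    "storage": 100_000,
    "other": 50_000,
}

for service, share in concentrated_services(monthly_spend).items():
    print(f"{service}: {share:.0%} of total spend")
```

In this made-up bill, only compute and database cross the 20% line, so those are the two services that would get dedicated modeling first.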

If tagging compliance falls below 90%, cost allocation reports become statistically unreliable. At that point:

Minimum viable tag set:

Field reality: Untagged resources are usually non-production, which is usually where idle waste hides.

If tagging isn’t automated at provisioning time (via Terraform, ARM, or policy), it will degrade within a quarter.
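To make the 90% compliance floor concrete, here is a rough sketch of how a compliance rate could be computed from a resource inventory. The tag keys and inventory below are placeholders, not the tag set this guide recommends; swap in whatever your own tagging policy requires.

```python
def tag_compliance(resources, required_tags):
    """Fraction of resources carrying a non-empty value for every required tag."""
    if not resources:
        return 1.0  # nothing to tag, so nothing is out of compliance
    compliant = sum(
        1 for tags in resources if all(tags.get(key) for key in required_tags)
    )
    return compliant / len(resources)


# Placeholder tag keys (an assumption); substitute your own policy.
REQUIRED_TAGS = ["owner", "environment"]

# Hypothetical inventory: one dict of tags per resource.
inventory = [
    {"owner": "payments", "environment": "prod"},
    {"owner": "search", "environment": "staging"},
    {"owner": "", "environment": "dev"},  # empty value counts as missing
    {"environment": "dev"},               # owner tag absent
]

rate = tag_compliance(inventory, REQUIRED_TAGS)
if rate < 0.90:  # the 90% floor from the text above
    print(f"Tag compliance {rate:.0%}: allocation reports are unreliable")
```

Treating an empty tag value as missing is a design choice in this sketch. A tag that exists but carries no value does nothing for cost allocation.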

Most dashboards show total spend, and that’s the wrong metric. Instead, track what percentage of predictable workloads is still running on on-demand pricing.

If 40–50% of baseline compute runs on on-demand, you’re structurally overpaying, even if utilization looks healthy.

For example, if:

That’s a six-figure optimization opportunity before deleting a single instance.

Visibility must also include:

If you’re not tracking coverage weekly, you’re already behind.

An alert without ownership is noise. Effective anomaly detection requires:

Watch for:

Field observation: Most anomalies are not catastrophic spikes. They are slow creep, i.e., 3–5% monthly growth that compounds into six figures annually.
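The compounding behind slow creep is worth seeing in numbers. The sketch below is a simplified model, assuming a constant monthly growth rate and a flat bill as the comparison point. The $100K starting bill and the 4% rate are hypothetical.

```python
def creep_overspend(monthly_base, monthly_growth, months=12):
    """Extra spend over `months` compared with staying flat at monthly_base."""
    extra = 0.0
    for month in range(1, months + 1):
        grown = monthly_base * (1 + monthly_growth) ** month
        extra += grown - monthly_base
    return extra


# Hypothetical $100K/month bill creeping at 4% per month (illustrative only).
extra = creep_overspend(100_000, 0.04)
print(f"Extra spend over 12 months: ${extra:,.0f}")
```

Under these assumptions the extra spend lands in six figures within a year, even though no single month looks alarming on its own.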

If you execute these four visibility best practices correctly, you gain clarity. But clarity caps at 10% savings. You haven’t yet removed waste or addressed structural pricing.

That’s next.

Also read: GCP Cost Optimization Best Practices & Why They Don’t Scale

Once visibility is in place, the next layer of cloud cost optimization best practices focuses on eliminating structural waste. This is where most engineering-driven optimization happens and where many teams mistakenly believe they are “fully optimized.”

In reality, this category typically unlocks 10–20% savings. It is meaningful, but it has a ceiling. Waste reduction removes inefficiency. It does not address structural pricing; that comes later.

Idle resources accumulate continuously in any active cloud environment. Dev environments get spun up and forgotten, and snapshots persist long after they’re needed.

Some common offenders include:

Operational rule: Any resource showing near-zero utilization for a sustained period should be reviewed within one week.

In mid-sized environments, idle resource cleanup alone often reveals mid-five-figure annual savings.

Overprovisioning is one of the most persistent forms of cloud waste. Instances are frequently sized for theoretical peak traffic rather than actual sustained demand. Because cloud infrastructure runs 24/7, you pay for that oversized capacity continuously, not just during spikes.

When rightsizing, you should always:

In most production environments, average compute utilization falls below 40%. That signals excess headroom.

A practical target for steady-state production workloads is 50–65% utilization, with autoscaling absorbing bursts.
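One way to turn the utilization target into a sizing estimate is sketched below. It assumes average utilization is a fair summary of steady-state load and uses 60%, the middle of the 50–65% range, as the default target. The 16-vCPU fleet is hypothetical.

```python
def rightsized_capacity(current_capacity, avg_utilization, target_utilization=0.60):
    """Capacity that would run the same average load at the target utilization."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    used = current_capacity * avg_utilization  # capacity actually consumed
    return used / target_utilization


# Hypothetical fleet: 16 vCPUs averaging 30% utilization.
needed = rightsized_capacity(16, 0.30)
print(f"About {needed:.1f} vCPUs would hold the same load at 60% utilization")
```

This only sizes for the average. Peak behavior still needs checking before any change, which is why the target assumes autoscaling is in place to absorb bursts.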

Kubernetes environments frequently obscure waste because cost attribution is more complex than traditional VM workloads.

Optimization requires visibility into:

Persistent node utilization below 50% is a red flag. Misconfigured resource requests often result in inflated cluster sizes.

Kubernetes cost optimization is about aligning provisioned capacity with real workload demand and eliminating unnecessary buffer layers. If Kubernetes represents a meaningful share of your compute spend, it must be optimized intentionally, and not treated as a black box.

Also read: 7 AWS Savings Plan KPIs for Better Cost Efficiency

Non-production environments rarely require continuous uptime. Yet many organizations run development and staging workloads 24/7 by default.

If non-production environments are only needed during business hours, scheduling them accordingly can materially reduce spend.

For example, running 40 hours a week instead of the full 168 cuts runtime by more than 75%.

Automated shutdown policies for nights and weekends can produce immediate savings without performance trade-offs.
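The runtime arithmetic can be checked in a few lines. This is only a sketch of the calculation. The function name is illustrative, and it measures running hours rather than the full bill.

```python
HOURS_PER_WEEK = 24 * 7  # 168


def runtime_reduction(scheduled_hours, total_hours=HOURS_PER_WEEK):
    """Fraction of weekly runtime removed by running only scheduled_hours."""
    return 1 - scheduled_hours / total_hours


reduction = runtime_reduction(40)
print(f"Runtime reduction: {reduction:.1%}")  # prints "Runtime reduction: 76.2%"
```

Runtime reduction translates into cost reduction only for charges billed by running time. Attached storage, for example, typically keeps accruing while instances are stopped.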

Storage costs grow gradually, which makes them easy to ignore.

Common patterns include:

Data transfer costs also deserve scrutiny. Cross-region and cross-availability-zone traffic can silently inflate monthly bills. If inter-region data transfer exceeds 5–10% of total spend, architecture review is warranted.

Storage and data transfer optimization rarely produce headlines. But over time, they compound into significant recurring costs.

Executed well, Category 1 and Category 2 cloud cost optimization best practices can drive measurable savings and improve operational hygiene.

But they do not address the largest structural cost driver in most cloud environments, which is on-demand pricing for predictable workloads.

That is where Category 3 begins.

This section focuses on commitment and coverage best practices: the discipline of aligning baseline usage with discounted commitment instruments across AWS, Azure, and GCP. When executed correctly, this layer typically produces the largest savings impact.

Cloud providers offer multiple commitment models, each with different levels of flexibility and discount depth.

Across major clouds:

AWS

Azure

GCP

These differ in:

Before purchasing any commitment, you should be able to answer:

Commitment selection is about balancing flexibility with financial efficiency, not just maximizing the discount percentage.

Commitments should cover the predictable baseline, not monthly averages or peaks.

Baseline modeling usually requires:

For example, if your monthly compute averages $250K but sustained minimum usage sits at $180K, you should target the $180K baseline. Committing against the full $250K introduces unnecessary exposure.
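The gap between average and sustained-minimum spend can be estimated from billing history. The sketch below uses a low percentile of observed spend as a proxy for the sustained floor. That is an assumption on our part rather than a prescribed method, and the history values (in $K) are invented so that they average $250K with a floor of $180K.

```python
import math


def sustained_baseline(spend_samples, percentile=10.0):
    """Nearest-rank percentile of observed spend, used as a proxy for the floor."""
    if not spend_samples:
        raise ValueError("need at least one spend sample")
    ordered = sorted(spend_samples)
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical monthly compute spend in $K (illustrative only).
history = [180, 185, 210, 240, 250, 260, 275, 290, 300, 310]

average = sum(history) / len(history)
baseline = sustained_baseline(history)
print(f"Average: ${average:.0f}K, commitment baseline: ${baseline}K")
```

A percentile is used instead of the raw minimum so that, with a longer history, a single outage or unusually quiet period does not drag the baseline down. With finer-grained data, such as hourly spend, the same function applies unchanged.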

Full 100% coverage is rarely optimal in an environment where workloads fluctuate. In stable environments, however:

Calculate coverage as:

Coverage = Committed Spend ÷ Eligible Baseline Spend


If coverage is consistently below 60% in a stable environment, on-demand exposure is likely excessive. If coverage exceeds 90%, reassess your overcommitment risk.
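The coverage formula and the two thresholds above fit in a short helper. This is a minimal sketch for a stable environment. The function names and the sample figures are illustrative.

```python
def coverage(committed_spend, eligible_baseline_spend):
    """Coverage = Committed Spend / Eligible Baseline Spend."""
    if eligible_baseline_spend <= 0:
        raise ValueError("eligible baseline spend must be positive")
    return committed_spend / eligible_baseline_spend


def assess_coverage(ratio, low=0.60, high=0.90):
    """Apply the 60% and 90% guide rails from the text above."""
    if ratio < low:
        return "on-demand exposure likely excessive"
    if ratio > high:
        return "reassess overcommitment risk"
    return "within range"


# Hypothetical month: $90K committed against a $180K eligible baseline.
ratio = coverage(90_000, 180_000)
print(f"Coverage {ratio:.0%}: {assess_coverage(ratio)}")
```

The thresholds are left as parameters because the right band depends on how stable the workload actually is.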

Also read: How to Choose Between 1-Year and 3-Year AWS Commitments

Compute is typically the starting point, but it should not be the only focus. A structured layering approach often follows the sequence below:

Database commitments often involve greater modeling complexity, but they also represent consistent baseline usage in mature environments. Ignoring non-compute commitments can leave substantial savings unrealized.

Commitments should not be static. Changes that require reassessment include:

Coverage that was appropriate six months ago may be misaligned today.

Under-commitment is often driven by perceived lock-in risk. Most teams hesitate to increase coverage because they fear:

This risk, however, can be managed in several ways:

Reducing downside exposure enables higher rational coverage levels. Without risk management, teams remain overly conservative and leave structural discounts unused.

Manual commitment management does not scale. It requires:

When commitment analysis is performed quarterly, coverage drift accumulates silently. On the other hand, automated recommendation refresh (ideally on a daily cadence) allows organizations to:

Coverage alone is insufficient. Utilization matters too.

Here are some key metrics to consider:

Low utilization indicates overcommitment or workload drift, while high on-demand exposure indicates undercommitment. Both reduce realized savings. Effective cloud cost optimization best practices require monitoring both sides of the equation.
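Both sides of that equation can be tracked with two ratios. The definitions below are one reasonable way to compute them, not the only one, and all figures are hypothetical.

```python
def commitment_utilization(commitment_used, commitment_purchased):
    """Share of purchased commitment actually consumed by running workloads."""
    if commitment_purchased <= 0:
        raise ValueError("commitment_purchased must be positive")
    return commitment_used / commitment_purchased


def on_demand_exposure(on_demand_spend, eligible_spend):
    """Share of commitment-eligible spend still billed at on-demand rates."""
    if eligible_spend <= 0:
        raise ValueError("eligible_spend must be positive")
    return on_demand_spend / eligible_spend


# Hypothetical month (illustrative only).
utilization = commitment_utilization(82_000, 100_000)
exposure = on_demand_exposure(60_000, 180_000)
print(f"Utilization {utilization:.0%}, on-demand exposure {exposure:.0%}")
```

Reading the two together matters. Low utilization paired with high exposure usually means commitments exist but are pointed at the wrong workloads.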

Savings must be measurable and attributable. You should include the following in your reporting:

Optimization initiatives that cannot demonstrate realized financial impact lose executive support. Savings must show up clearly on the invoice and in financial reporting.

Also read: Google BigQuery Committed Use Discounts (CUDs) & Optimization Guide

The difference between moderate optimization and material savings usually comes down to one question: Are predictable workloads still running on on-demand pricing?

The following examples illustrate how disciplined coverage modeling, supported by automated commitment management, produces structural savings, and how the cloud cost optimization tool Usage.ai helped.

Order.co was running substantial AWS workloads across EC2, RDS, Redshift, and OpenSearch. Like many fast-growing organizations, the infrastructure itself was not inefficient. Instances were not wildly oversized. There was no obvious sprawl crisis.

The issue was that a significant portion of predictable workloads continued to run on on-demand pricing because traditional AWS Savings Plans required long-term commitments that limited flexibility.

As the company scaled, rising usage increased cloud spend. At the same time, the engineering team was focused on expanding product capabilities rather than managing commitment structures or modeling financial risk. Conservative commitment decisions left structural discounts unrealized.

Using Usage.ai’s Assured Commitments, Order.co gained access to savings comparable to a 3-year AWS Savings Plan while only being obligated for the first month. Through the Guaranteed Buyback program, underutilized commitments could be sold back, significantly reducing financial exposure.

With downside risk mitigated, Order.co increased coverage of eligible on-demand workloads without sacrificing flexibility or performance.

The result was a 57% reduction in AWS costs.

The savings were not the result of deleting infrastructure. They came from restructuring how predictable workloads were purchased and enabling flexibility while capturing deeper discounts.

Secureframe faced a related challenge as it scaled its automated compliance platform. AWS usage across EC2, RDS, and OpenSearch increased rapidly to support customer growth and onboarding. The infrastructure was necessary to meet demand, but cloud costs began to rise alongside expansion.

Traditional AWS Savings Plans required long-term commitments of up to three years. For a fast-growing company, that level of lock-in introduced meaningful risk and limited flexibility.

As a result, a substantial portion of on-demand usage remained uncovered by long-term commitments, despite the availability of significant discounts.

Secureframe partnered with Usage.ai to implement Assured Commitments. This approach provided savings comparable to a 3-year AWS Savings Plan while reducing commitment size dramatically and limiting financial obligation to the first month. Through the Guaranteed Buyback program, underutilized commitments could be sold back, preserving flexibility.

This structure allowed Secureframe to significantly expand coverage of eligible on-demand workloads while maintaining agility.

The outcome was a 50%+ reduction in AWS costs.

This demonstrates that disciplined commitment expansion, when paired with downside protection, can unlock substantial savings without restricting growth or operational flexibility.

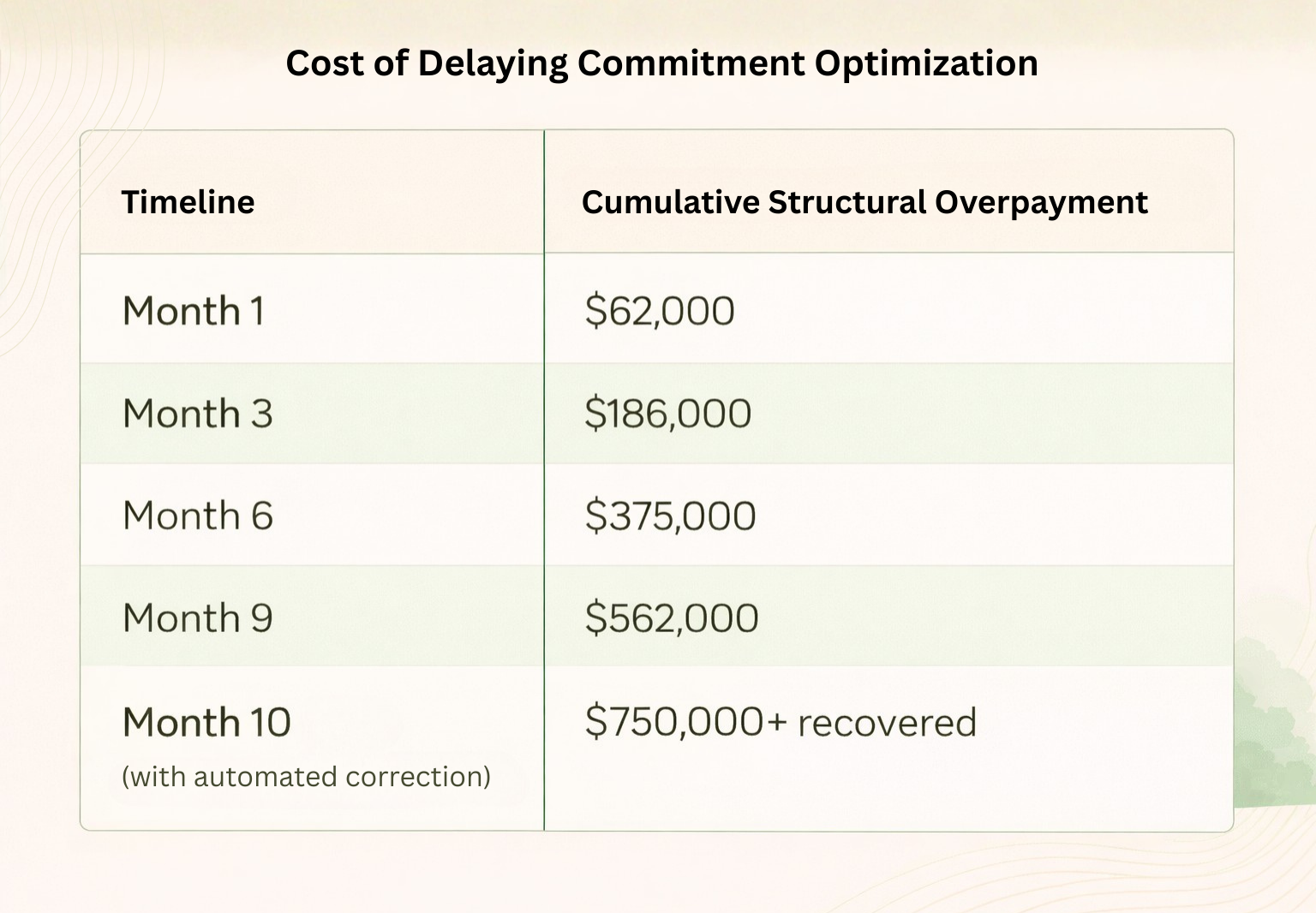

By the time most teams begin actively correcting commitment coverage, structural overpayment has already accumulated.

For example, in a $5M annual cloud environment, even a conservative 15% structural gap represents $750,000 per year. That gap doesn’t appear all at once. It builds month by month when predictable workloads remain on on-demand pricing.

If commitment alignment is delayed, the progression typically looks like this:

These numbers reflect steady-state workloads that simply weren’t aligned with discounted commitment instruments quickly enough.

Quarterly review cycles are often the bottleneck. When commitment decisions are revisited every 90 days, coverage gaps remain unresolved for entire billing cycles. In fast-moving environments, workload drift during those gaps compounds the problem.

A 10% coverage shortfall on a $5M cloud bill represents $500,000 in annualized exposure, which is more than $40,000 per month. Left uncorrected, that exposure becomes recurring structural overpayment.
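The exposure figures in this section are simple multiplication, which makes them easy to rerun against your own bill. A minimal sketch, with an illustrative function name:

```python
def coverage_gap_exposure(annual_bill, gap_fraction):
    """Return (annual, monthly) overpayment exposure for a coverage gap."""
    annual = annual_bill * gap_fraction
    return annual, annual / 12


# The two scenarios from the text: a $5M bill with a 10% and a 15% gap.
for gap in (0.10, 0.15):
    annual, monthly = coverage_gap_exposure(5_000_000, gap)
    print(f"{gap:.0%} gap: ${annual:,.0f} per year, ${monthly:,.0f} per month")
```

Swapping in your own annual bill and estimated gap gives a quick sense of what each month of delay costs.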

So, when it comes to cloud cost optimization, cadence determines realized savings.

Cloud cost optimization best practices are often presented as checklists. But when you step back, the pattern is simpler and more structural than that.

Cloud waste is rarely just the result of unused infrastructure. More often, it comes from predictable workloads running on the wrong pricing model. Sustained savings come from aligning baseline demand with discounted commitments and maintaining that alignment continuously.

The organizations that achieve 30–50% reductions do not treat optimization as a quarterly initiative. They treat it as an ongoing financial discipline. Coverage is modeled against sustained usage, not peak optimism. Commitments are adjusted as workloads evolve, and structural pricing gaps are corrected quickly instead of compounding over time.

That level of consistency is difficult to maintain manually, especially in multi-cloud environments where usage shifts daily. This is the gap Usage.ai is designed to close. By continuously analyzing usage across AWS, Azure, and GCP, refreshing commitment recommendations on a daily cadence, and automating execution within defined coverage targets, Usage.ai turns cloud cost optimization from a reactive exercise into a controlled system.

Cloud infrastructure will continue to grow. The question is whether it grows on on-demand pricing or disciplined commitment coverage.

The difference is measurable.

Sign up to Usage.ai and connect your cloud account in minutes. See what disciplined commitment coverage would save you before another billing cycle compounds the gap.
