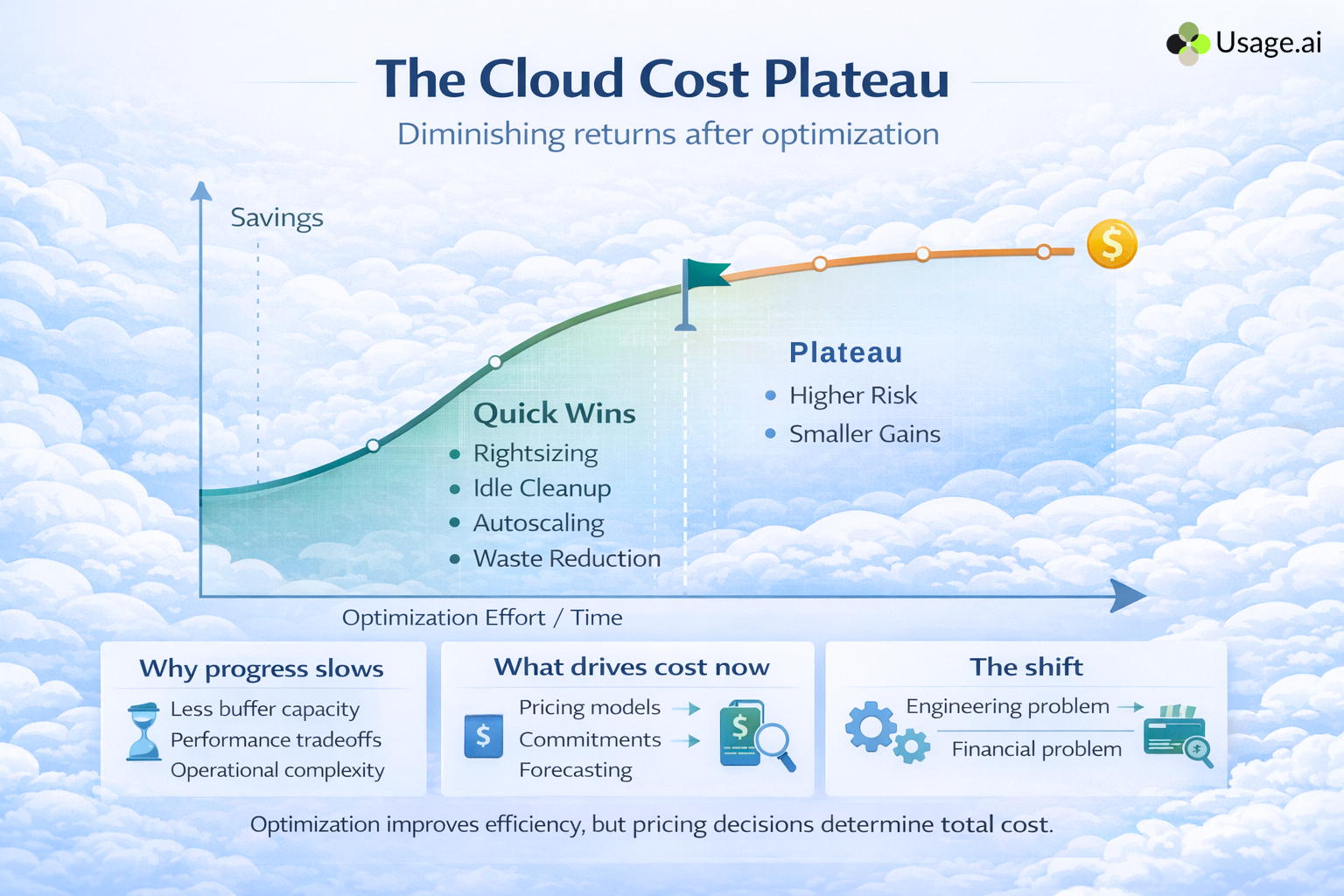

Cloud resource optimization is usually the first place teams look when cloud costs start climbing. You rightsize instances, clean up idle resources, tune autoscaling policies, and improve utilization across your infrastructure. In many cases, this work delivers quick wins, sometimes cutting waste by 20–30% in the first few months.

But then the savings slow down.

Despite ongoing cloud performance optimization and increasingly efficient architectures, many engineering and FinOps teams find themselves asking the same question: Why are cloud costs still so high if our resources are optimized? The uncomfortable answer is that cloud resource optimization focuses on how efficiently you run infrastructure, not how cloud pricing actually works.

Modern cloud bills are driven less by raw utilization and more by long-term pricing decisions, such as capacity planning, demand predictability, and whether workloads are covered by discounted commitments. Optimizing servers and workloads improves efficiency, but it doesn’t automatically translate into lower unit prices. In fact, highly optimized environments often expose a new problem: teams are running lean infrastructure at full on-demand rates because committing feels too risky.

This is where most cloud optimization guidance stops short. It treats cloud efficiency as a purely technical problem and avoids the financial tradeoffs that ultimately determine costs. As a result, teams hit a plateau where further cloud server optimization yields diminishing returns, even as spend continues to grow.

In this article, we’ll explore why cloud resource optimization alone doesn’t fix cloud costs and what’s missing between efficient infrastructure and real, sustained savings.

Cloud resource optimization is the practice of aligning cloud infrastructure usage with actual workload demand. In practical terms, it focuses on making sure you’re not paying for more compute, storage, or networking capacity than your applications need at any given time.

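Most cloud optimization guidance centers on a familiar set of actions:

- Rightsizing oversized instances
- Cleaning up idle and unused resources
- Tuning autoscaling policies
- Consolidating workloads to improve utilization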

These practices are foundational. Without them, cloud efficiency will suffer, performance will degrade, and costs will spiral quickly. For teams early in their cloud journey, cloud resource optimization can deliver meaningful savings by eliminating low-hanging fruit and correcting years of accumulated inefficiency.

However, this definition also reveals an important limitation. Cloud resource optimization is fundamentally operational, not economic. It answers the question, “Are we using what we’re paying for efficiently?” but not, “Are we paying the lowest possible price for what we use?”

Optimizing resources improves utilization, not pricing. It doesn’t change whether workloads are billed at on-demand rates or discounted commitment rates. It doesn’t address how long capacity is locked in, how demand volatility affects financial exposure, or how future growth impacts costs.

That distinction is subtle, but it’s the reason many well-optimized environments still struggle with rising cloud bills. Once waste is removed, the remaining cost drivers sit outside the scope of resource optimization entirely.

In the early stages, cloud resource optimization feels incredibly effective. Rightsizing oversized instances, deleting unused resources, and tightening autoscaling policies can quickly eliminate obvious waste. For many teams, this phase produces the most dramatic cost reductions they’ll ever see.

Then progress slows.

Once the most visible inefficiencies are addressed, further optimization becomes harder, riskier, and far less rewarding. Each additional percentage point of cloud efficiency requires disproportionately more effort and often introduces new tradeoffs around performance, reliability, and operational complexity.

This is the cloud cost plateau. It’s the point where cloud resource optimization stops delivering linear savings and starts colliding with real-world constraints.

One reason is that highly optimized environments leave very little slack. Aggressive rightsizing reduces buffer capacity, making workloads more sensitive to traffic spikes or unpredictable demand. Autoscaling can help, but it adds latency, operational overhead, and cost variability. At some point, teams intentionally stop optimizing further to protect performance and stability.
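A rough sizing calculation shows why teams stop short of maximum utilization. The sketch below is a minimal model that assumes load spreads evenly across identical instances. The peak load and per-instance capacity are hypothetical, and `instances_needed` is an illustrative helper, not part of any provider API.

```python
import math

def instances_needed(peak_load: float, capacity_per_instance: float,
                     target_utilization: float) -> int:
    """Smallest instance count that keeps peak utilization at or below the target.

    Assumes load is spread evenly across identical instances (a simplification).
    A target of 0.5 leaves 50% headroom for spikes; 0.9 leaves only 10%.
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    return math.ceil(peak_load / (capacity_per_instance * target_utilization))

# Hypothetical service: 1,200 requests/s at peak, 100 requests/s per instance.
for target in (0.5, 0.7, 0.9):
    print(f"target {target:.0%}: {instances_needed(1200, 100, target)} instances")
# target 50%: 24 instances
# target 70%: 18 instances
# target 90%: 14 instances
```

Raising the target from 50% to 90% cuts the fleet from 24 to 14 instances, but it also leaves far less room to absorb traffic above the forecast peak.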

Another reason is organizational. As infrastructure becomes leaner, decisions shift away from engineering optimization and toward financial planning. Cloud costs are no longer driven by waste, but by the price paid per unit of capacity. Yet most optimization efforts are still focused on reducing usage rather than improving pricing.

This is where cloud efficiency gains start to decouple from actual savings. Teams may be running infrastructure at high utilization with strong performance metrics, but still paying on-demand rates for the majority of their usage.
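The gap between utilization and price shows up clearly in a blended-rate calculation. This sketch assumes a single flat commitment discount and that committed capacity is always fully used. The $0.20/hr rate and 30% discount are hypothetical placeholders, not quoted provider prices.

```python
def blended_hourly_cost(usage_units: float, coverage: float,
                        on_demand_rate: float, discount: float) -> float:
    """Hourly bill when `coverage` (0..1) of usage is billed at a committed,
    discounted rate and the rest at on-demand rates.

    Assumes the committed portion is fully used (no idle commitment).
    """
    if not 0 <= coverage <= 1:
        raise ValueError("coverage must be in [0, 1]")
    committed_rate = on_demand_rate * (1 - discount)
    covered = usage_units * coverage
    uncovered = usage_units - covered
    return covered * committed_rate + uncovered * on_demand_rate

# Same 100 well-utilized instances; only commitment coverage changes.
for coverage in (0.0, 0.2, 0.7):
    cost = blended_hourly_cost(100, coverage, on_demand_rate=0.20, discount=0.30)
    print(f"coverage {coverage:.0%}: ${cost:.2f}/hr")
# coverage 0%: $20.00/hr
# coverage 20%: $18.80/hr
# coverage 70%: $15.80/hr
```

Utilization is identical in all three rows; the 21% drop in the hourly bill comes entirely from pricing.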

At the plateau, cloud costs are no longer an engineering problem alone. They become a financial optimization problem, governed by forecasting accuracy, risk tolerance, and long-term pricing decisions. And those forces sit outside the reach of traditional cloud resource optimization.

Cloud performance optimization is often treated as the natural extension of cloud resource optimization. By tuning applications, improving request handling, and ensuring workloads scale efficiently, teams can serve more traffic with fewer resources. In theory, better performance should translate into lower costs.

In practice, that translation is incomplete.

Performance optimization primarily affects how efficiently applications consume infrastructure, not how that infrastructure is priced. Faster response times, lower latency, and improved throughput can reduce the total amount of compute required, but they don’t change the billing model applied to that compute. If workloads are still billed at on-demand rates, improved performance simply means you are using fewer resources at the same high unit cost.

This creates a paradox. As cloud performance optimization makes workloads more predictable and stable, it actually strengthens the case for discounted pricing through long-term commitments. Usage patterns become clearer, baselines flatten, and variability decreases. Those are exactly the conditions cloud providers reward with lower rates.
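One common way to turn “baselines flatten” into a number is to treat a low percentile of historical hourly usage as the level that is reasonably safe to commit to. The sketch below uses Python’s `statistics.quantiles`. The 10th-percentile choice and the two 11-hour usage series are hypothetical, and a real analysis would typically use weeks or months of data.

```python
import statistics

def commitment_baseline(hourly_usage: list[float], percentile: int = 10) -> float:
    """Usage level met or exceeded in roughly (100 - percentile)% of hours.

    statistics.quantiles(..., n=100) returns the 99 cut points P1..P99,
    so P10 sits at index 9.
    """
    cuts = statistics.quantiles(hourly_usage, n=100, method="inclusive")
    return cuts[percentile - 1]

# Two hypothetical workloads that both average 100 units/hr.
stable = [95, 98, 100, 100, 101, 102, 100, 99, 103, 102, 100]
volatile = [40, 160, 70, 130, 100, 55, 145, 100, 85, 115, 100]

for name, series in (("stable", stable), ("volatile", volatile)):
    print(f"{name}: mean {statistics.mean(series):.0f}, "
          f"P10 baseline {commitment_baseline(series):.0f}")
# stable: mean 100, P10 baseline 98
# volatile: mean 100, P10 baseline 55
```

Both workloads have the same average, but the stable one supports committing to roughly 98% of its mean while the volatile one supports only about 55%.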

Yet many teams stop short of taking that next step.

The reason is risk. Performance optimization improves confidence in current behavior, but it does nothing to protect against future change. A sudden traffic drop, product shift, or architectural decision can quickly invalidate assumptions that once looked safe. Without a buffer against downside risk, committing to discounted pricing still feels dangerous, even in a highly optimized environment.

As a result, organizations often run well-performing, efficient systems while paying on-demand prices for the majority of their cloud usage. Performance gains reduce infrastructure needs, but savings remain capped because pricing decisions remain conservative.

Cloud performance optimization helps teams do more with less. It does not, on its own, help teams pay less for what they consistently use. That gap is where cloud costs continue to accumulate, despite technically sound, high-performing systems.

Capacity planning is supposed to connect efficient infrastructure with predictable cloud costs. In theory, teams forecast future demand, size baseline capacity accordingly, and make informed decisions about long-term usage. In practice, capacity planning is where many cloud cost strategies start to unravel.

The core challenge is uncertainty. Cloud demand rarely behaves in straight lines. Traffic fluctuates, products evolve, and architectures change faster than long-term forecasts can adapt. Even organizations with mature FinOps practices struggle to predict usage accurately months in advance.

Most cloud optimization guidance oversimplifies this reality. Capacity planning is often reduced to generic advice like “analyze historical trends” or “forecast conservatively,” without addressing what happens when forecasts fail.

When forecasts are wrong, the consequences are predictable:

Because of these risks, many teams respond by intentionally under-planning capacity. They keep more workloads on on-demand pricing, even when usage appears stable, because the financial downside of over-committing feels harder to justify than paying slightly more each month.

This conservative approach has real tradeoffs:

Capacity planning under uncertainty becomes less about optimizing averages and more about managing downside risk. Without a way to absorb forecasting errors, teams are forced to choose between two imperfect outcomes: accept higher long-term cloud costs or take on commitment risk they can’t easily reverse.

This is the gap cloud resource optimization can’t fill. Optimization improves efficiency within existing constraints, but it doesn’t resolve the economic impact of unpredictable demand. Until capacity planning accounts for uncertainty, not just utilization, cloud costs will continue to rise, even in highly optimized environments.
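The two ways a forecast can fail can be priced out directly. This sketch assumes a commitment billed every hour at a flat 30% discount, with any usage above it charged on-demand. Prices are normalized to $1.00 per unit-hour, and every figure is hypothetical.

```python
HOURS = 720            # roughly one month
ON_DEMAND_RATE = 1.00  # normalized price per unit-hour (hypothetical)
DISCOUNT = 0.30        # hypothetical commitment discount

def monthly_cost(actual_units: float, committed_units: float) -> float:
    """Cost of one month at steady usage with a given commitment level."""
    committed_rate = ON_DEMAND_RATE * (1 - DISCOUNT)
    hourly = committed_units * committed_rate                          # paid whether used or not
    hourly += max(actual_units - committed_units, 0) * ON_DEMAND_RATE  # overflow billed on-demand
    return hourly * HOURS

forecast = 100  # units/hr the team committed to
for actual in (60, 100, 140):
    with_commit = monthly_cost(actual, forecast)
    on_demand_only = monthly_cost(actual, 0)
    print(f"actual {actual}: committed ${with_commit:,.0f} "
          f"vs on-demand only ${on_demand_only:,.0f}")
# actual 60: committed $50,400 vs on-demand only $43,200
# actual 100: committed $50,400 vs on-demand only $72,000
# actual 140: committed $79,200 vs on-demand only $100,800
```

Over-forecasting (actual 60) turns the commitment into a $7,200 monthly loss, while under-forecasting (actual 140) still saves money but leaves 40 units/hr at full on-demand rates.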

Cloud server optimization focuses on improving how individual servers, virtual machines, or containers consume resources. This includes tuning instance sizes, optimizing CPU and memory allocation, consolidating workloads, and ensuring servers aren’t overprovisioned relative to demand. When done well, it leads to higher utilization and more efficient infrastructure.

But server optimization operates entirely at the infrastructure layer and not the pricing layer. You can perfectly optimize cloud servers and still pay the highest possible rate for them. That’s because cloud pricing is largely decoupled from how well a server is tuned. Whether a workload runs at 30% utilization or 80% utilization, on-demand pricing charges the same unit rate. Optimization reduces how many servers you need, but it doesn’t reduce what each unit of capacity costs.
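A small calculation makes the point: better utilization shrinks the fleet, but the per-instance rate on the bill never moves. The sketch assumes each instance provides one unit of work at 100% utilization and uses a hypothetical on-demand rate of $0.20 per instance-hour.

```python
ON_DEMAND_RATE = 0.20  # hypothetical $/instance-hour, charged at any utilization

def fleet_cost(demand_units: int, utilization_pct: int) -> tuple[int, float]:
    """Instances needed to serve `demand_units` of real work at an average
    utilization (in percent), plus the resulting hourly bill.

    Assumes each instance provides exactly 1 unit of capacity at 100%.
    """
    servers = -(-demand_units * 100 // utilization_pct)  # integer ceiling division
    return servers, servers * ON_DEMAND_RATE

for util in (30, 80):
    servers, cost = fleet_cost(24, util)
    print(f"{util}% utilization: {servers} instances x "
          f"${ON_DEMAND_RATE:.2f}/hr = ${cost:.2f}/hr")
# 30% utilization: 80 instances x $0.20/hr = $16.00/hr
# 80% utilization: 30 instances x $0.20/hr = $6.00/hr
```

The bill drops from $16.00 to $6.00 per hour because fewer instances are running; the $0.20 unit rate is identical in both rows.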

This creates a common misconception that better utilization automatically equals better savings. In reality, utilization determines only how much capacity you consume, not how cheaply that capacity is billed.

Cloud providers reserve their deepest discounts for committed usage. These discounts aren’t based on how optimized a server is, but on how confidently a customer can commit to using capacity over time.

As a result, many teams end up in an inefficient middle ground:

This is where pricing risk becomes the dominant cost driver.

Cloud providers offer their largest discounts through usage commitments. Whether it’s committed spend, reserved capacity, or long-term usage agreements, the economic model is consistent: the more predictably you can commit to future usage, the lower your unit costs become. For stable workloads, these discounts can dramatically outperform any savings achieved through resource optimization alone.

But commitments come with a catch. If usage drops below what you committed to, you don’t just lose flexibility; you lose money.

This is where commitment risk enters the picture. It’s the risk that future usage won’t match today’s assumptions, leaving teams locked into paying for capacity they no longer need. That risk doesn’t show up in CPU graphs or utilization dashboards, but it dominates financial decision-making behind the scenes.
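Commitment risk has a simple break-even point. If a commitment is priced at a discount d off on-demand and you actually consume a fraction u of the committed capacity, the commitment beats paying on-demand for that usage only when u > 1 - d. The sketch below checks this with a hypothetical 30% discount and normalized prices. Real commitment products differ in how discounts are applied, so treat it as an approximation.

```python
def commitment_net_savings(committed_units: float, utilization: float,
                           discount: float, on_demand_rate: float = 1.0,
                           hours: float = 720) -> float:
    """Savings versus paying on-demand for the capacity actually used.

    committed_units * (1 - discount) is paid regardless of use;
    `utilization` is the fraction of the commitment actually consumed.
    A negative result means the commitment lost money.
    """
    committed_cost = committed_units * on_demand_rate * (1 - discount) * hours
    on_demand_equivalent = committed_units * utilization * on_demand_rate * hours
    return on_demand_equivalent - committed_cost

def break_even_utilization(discount: float) -> float:
    # Savings = C * p * hours * (u - (1 - d)), which is zero at u = 1 - d.
    return 1 - discount

print(break_even_utilization(0.30))  # 0.7
for u in (0.9, 0.7, 0.6):
    print(u, round(commitment_net_savings(100, u, 0.30)))
# 0.9 14400
# 0.7 0
# 0.6 -7200
```

With a 30% discount, letting commitment usage slip below 70% turns the discount into a loss, even though nothing about the underlying servers has changed.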

Because of this, many organizations treat commitments as something to minimize rather than optimize. Even when workloads are stable and well-understood, teams hesitate to increase coverage because the downside feels asymmetric. If forecasts are correct, savings accrue gradually. If forecasts are wrong, the financial impact is immediate and difficult to reverse.
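The asymmetry is easier to see over a commitment’s term. This sketch tracks cumulative savings for a hypothetical 12-month commitment of 100 units at a 30% discount, where usage falls to 50 units after month four. Monthly prices are normalized, and all figures are assumptions.

```python
def cumulative_savings(monthly_usage: list[float], committed_units: float,
                       discount: float, price_per_unit_month: float = 1.0) -> list[float]:
    """Running savings versus pure on-demand, month by month.

    Usage above the commitment costs the same either way (on-demand), so each
    month's savings reduce to: covered usage at full price minus the commitment fee.
    """
    commitment_fee = committed_units * price_per_unit_month * (1 - discount)
    running, history = 0.0, []
    for used in monthly_usage:
        covered = min(used, committed_units)
        running += covered * price_per_unit_month - commitment_fee
        history.append(running)
    return history

usage = [100] * 4 + [50] * 8  # demand drops after month 4
history = cumulative_savings(usage, committed_units=100, discount=0.30)
print([round(x) for x in history])
# [30, 60, 90, 120, 100, 80, 60, 40, 20, 0, -20, -40]
```

Four months of steady savings are gone by month 10, and the rest of the term pushes the commitment into a net loss because the fee keeps accruing until the term ends.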

This fear shapes cloud cost outcomes more than most teams realize:

The result is a persistent gap between theoretical savings and realized savings. Cloud resource optimization improves readiness for commitments, but it doesn’t address the core blocker: who absorbs the cost when usage changes.

Until commitment risk is acknowledged and managed explicitly, cloud costs will continue to reflect worst-case assumptions. This is why many well-optimized environments still overpay and why optimization alone can’t deliver sustained cost control.

Traditional cloud resource optimization follows a simple logic: reduce waste, improve utilization, and keep infrastructure flexible. This approach minimizes operational risk, but it also anchors pricing decisions to worst-case assumptions. Because there’s no protection against downside scenarios, teams plan for what might happen instead of what usually happens.

Risk-adjusted optimization takes a different approach. It treats cloud efficiency, capacity planning, and pricing decisions as part of a single system.

The distinction looks like this:

This mental model shift is critical. Instead of asking, “Are we confident enough to commit?” teams ask, “What happens if we’re wrong?” When downside risk is managed, optimization can extend beyond infrastructure into pricing strategy.
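The question “What happens if we’re wrong?” can be made explicit by scoring each candidate commitment level against several demand scenarios instead of a single forecast. The sketch below compares two standard decision rules: minimum expected cost and minimum worst-case regret. The scenarios, probabilities, and 30% discount are hypothetical, and this is a generic decision-analysis illustration, not a description of any vendor’s method.

```python
# Hypothetical demand scenarios (units/hr) with subjective probabilities.
SCENARIOS = {60: 0.2, 100: 0.6, 120: 0.2}
ON_DEMAND = 1.0   # normalized on-demand price per unit-hour
COMMITTED = 0.7   # normalized committed price (assumes a 30% discount)

def hourly_cost(commit: int, usage: int) -> float:
    # The commitment is paid in full; usage above it is billed on-demand.
    return commit * COMMITTED + max(usage - commit, 0) * ON_DEMAND

def expected_cost(commit: int) -> float:
    return sum(p * hourly_cost(commit, u) for u, p in SCENARIOS.items())

def max_regret(commit: int) -> float:
    # Regret = extra cost versus the best choice in hindsight, which is
    # committing exactly to the usage that materialized.
    return max(hourly_cost(commit, u) - hourly_cost(u, u) for u in SCENARIOS)

candidates = range(0, 130, 10)
best_expected = min(candidates, key=expected_cost)
best_robust = min(candidates, key=max_regret)
print(f"min expected cost: commit {best_expected} "
      f"(expected ${expected_cost(best_expected):.2f}/hr)")
print(f"min worst-case regret: commit {best_robust} "
      f"(worst case +${max_regret(best_robust):.2f}/hr)")
# min expected cost: commit 100 (expected $74.00/hr)
# min worst-case regret: commit 80 (worst case +$14.00/hr)
```

The two rules disagree. Minimizing expected cost commits to the most likely scenario, while minimizing worst-case regret commits less to limit the damage if demand falls to 60. Which rule fits depends on how much downside a team can absorb.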

In a risk-adjusted framework, cloud resource optimization becomes an enabler rather than the finish line. Efficient infrastructure provides the data and stability needed for better financial decisions, while risk management mechanisms absorb the impact of change.

As long as teams are forced to choose between flexibility and savings, they default to the safer option and continue paying higher on-demand rates.

This is the gap that optimization alone can’t close, and it’s exactly where Usage.ai enters the equation. Rather than asking teams to choose between flexibility and savings, Usage.ai is built around the idea of risk-adjusted optimization. It assumes that forecasting will never be perfect and designs the cost strategy around that reality.

Usage.ai builds on top of the work teams already do:

Instead of stopping there, Usage.ai focuses on how that optimized usage is paid for.

The platform continuously analyzes cloud usage across AWS, Azure, and GCP to identify where discounted commitments make sense and, just as importantly, where they introduce too much risk. Recommendations refresh every 24 hours, allowing teams to adapt quickly as usage patterns change.

But the real shift happens in how commitment risk is handled.

Traditional cloud commitments force an all-or-nothing decision. Either you lock usage in for one to three years to access discounts, or you stay flexible and pay on-demand rates. This binary choice is what keeps many teams under-committed, even when their workloads are stable.

Usage.ai’s Flex Commitments change that dynamic.

Flex Commitments are designed to deliver savings similar to Savings Plans or Reserved Instances, but without the same level of long-term rigidity. They allow teams to increase coverage incrementally, align commitments more closely with real usage, and adjust as conditions evolve, without being trapped by a single forecast made months earlier.

The benefit is that teams can unlock deeper discounts earlier, rather than waiting for “perfect certainty” that never arrives.

The biggest blocker to higher commitment coverage is the fear of underutilization. When usage drops, traditional commitments leave customers absorbing the full financial impact.

Usage.ai addresses this directly with guaranteed cashback assurance.

If committed usage isn’t fully consumed, Usage.ai returns real cash back to the customer according to agreed terms. This mechanism fundamentally changes the risk equation:

Instead of building cloud cost strategies around worst-case scenarios, teams can plan around expected usage with protection when reality deviates.

When cloud resource optimization, capacity planning, and risk-adjusted commitments work together, cloud costs behave differently:

This is why cloud resource optimization alone doesn’t fix cloud costs. Optimization prepares the ground, but pricing strategy determines the outcome. Usage.ai closes the gap between efficient infrastructure and lower cloud bills by automatically managing commitment risk.

If you want to see how much more you could save by safely increasing commitment coverage, get your personalized cloud savings preview. Sign up now for free.

1. Is cloud resource optimization enough to reduce cloud costs?

No. Cloud resource optimization removes waste and improves utilization, but it doesn’t change how usage is priced. Once inefficiencies are gone, cloud costs are driven by pricing models and commitment decisions.

2. What’s the difference between cloud efficiency and cloud cost savings?

Cloud efficiency measures how well resources are used. Cloud cost savings depend on how that usage is billed. An environment can be highly efficient and still expensive if most workloads run on on-demand pricing instead of discounted commitments.

3. Why do companies avoid cloud commitments even when usage is stable?

Because of risk. If usage drops, traditional commitments can leave teams paying for unused capacity. That downside often outweighs potential savings, so organizations stay under-committed and continue paying higher on-demand rates.

4. How does cloud capacity planning affect cloud costs?

Capacity planning determines expected baseline usage. Inaccurate forecasts force teams to choose between overpaying on-demand or risking unused commitments. Because demand is uncertain, many teams plan conservatively, which limits access to discounted pricing.

5. How does Usage.ai reduce cloud costs beyond optimization?

Usage.ai helps teams safely increase commitment coverage using Flex Commitments and real cashback protection. If usage drops, customers receive cash back, allowing deeper discounts without taking on full commitment risk.

6. What’s the biggest mistake teams make with cloud optimization?

Treating cloud optimization as purely technical. While efficiency matters, the largest savings come from pricing strategy. Ignoring commitment risk and coverage decisions often leaves significant savings unrealized, even in well-optimized environments.
